Use some additional training data to check which candidate is the best. We found step 3 was necessary for getting our prompts to generalize, which is why I recommend having multiple query images, but maybe this isn't needed for your application. IDK.
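Here is a minimal sketch of that candidate check, not the actual AutoPrompt code. The names `pick_best_candidate`, `predict`, and the toy prediction table are all hypothetical stand-ins; in a real setup `predict` would run your model with the candidate prompt on a held-out example.

```python
def pick_best_candidate(candidates, held_out_examples, predict):
    """Return the candidate prompt with the highest held-out accuracy.

    `predict(candidate, example)` is a hypothetical callable that returns
    the model's predicted label for `example` when using `candidate`.
    """
    best_candidate, best_accuracy = None, -1.0
    for candidate in candidates:
        correct = sum(
            1 for example, label in held_out_examples
            if predict(candidate, example) == label
        )
        accuracy = correct / len(held_out_examples)
        if accuracy > best_accuracy:
            best_candidate, best_accuracy = candidate, accuracy
    return best_candidate, best_accuracy


# Toy stand-in for a model: precomputed predictions per candidate (made up).
toy_predictions = {
    "prompt A": [1, 0, 1, 1],
    "prompt B": [1, 1, 0, 1],
}
# Held-out examples as (example index, gold label) pairs.
held_out = [(0, 1), (1, 1), (2, 0), (3, 0)]

best, accuracy = pick_best_candidate(
    list(toy_predictions),
    held_out,
    predict=lambda candidate, idx: toy_predictions[candidate][idx],
)
print(best, accuracy)
```

The important part is that the examples used here are separate from the ones that produced the gradients, so a candidate that only fits a single query does not win by default.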

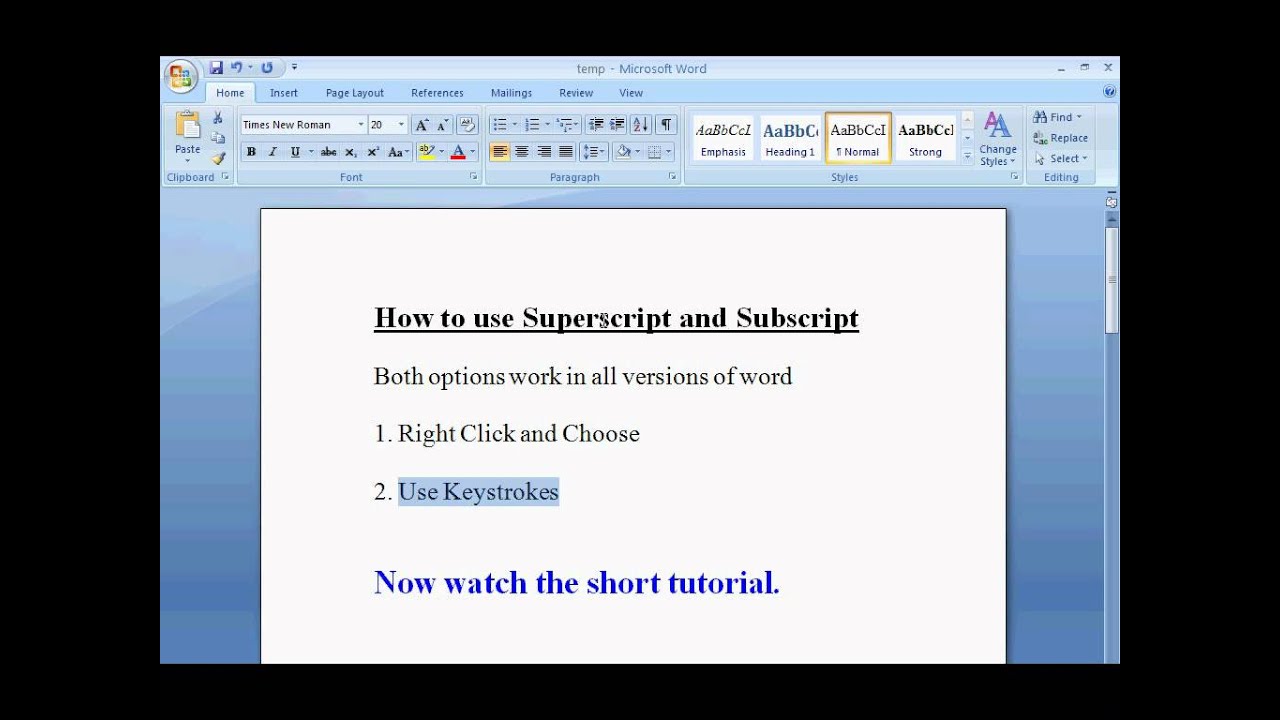

#AUTOPROMPT IN WORD HOW TO#

The `hotflip_attack` function is used to find the updates.

Hi, I don't know how to use automatic page numbering in Word on a paper that is already written. I would like some help with that, please.

The `GradientStorage` object registers the backward hook to store the gradients of the loss w.r.t. … Pretty much everything you need is contained in these pieces of the AutoPrompt code.
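To make the update step more concrete, here is a rough pure-Python sketch of a HotFlip-style candidate search. It assumes you already have a stored gradient for one trigger position. The function name `hotflip_candidates`, its parameters, and the toy numbers are illustrative assumptions, not the signature of the real `hotflip_attack`.

```python
def hotflip_candidates(averaged_grad, embedding_matrix,
                       num_candidates=3, increase_loss=False):
    """Rank vocabulary tokens by a first-order estimate of the loss change.

    Swapping in a token whose embedding has a very negative dot product
    with the gradient is expected to lower the loss the most.
    """
    scored = []
    for token_id, embedding in enumerate(embedding_matrix):
        dot = sum(g * e for g, e in zip(averaged_grad, embedding))
        # Flip the sign when the goal is to decrease the loss.
        scored.append((dot if increase_loss else -dot, token_id))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [token_id for _, token_id in scored[:num_candidates]]


# Toy vocabulary of 4 tokens with 2-dimensional embeddings (made up).
embedding_matrix = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
# Hypothetical gradient of the loss at one trigger position.
averaged_grad = [1.0, 0.2]

candidates = hotflip_candidates(averaged_grad, embedding_matrix, num_candidates=2)
print(candidates)  # [2, 1]
```

The ranking is only a linear approximation, so the returned tokens are candidates rather than guaranteed improvements. That is why each one still has to be evaluated on real data before being kept.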

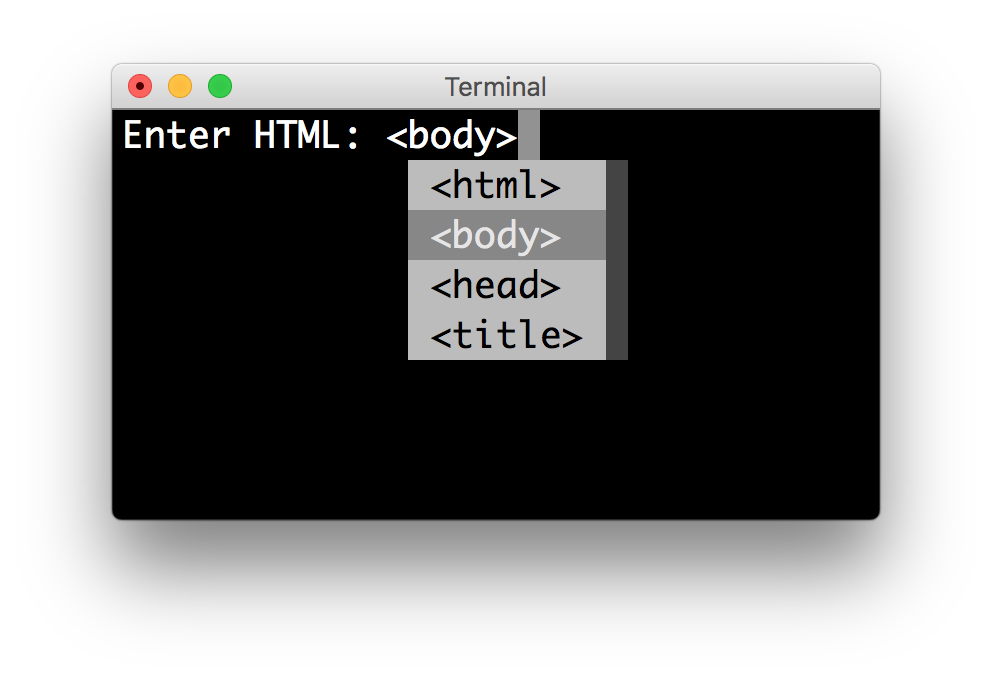

#AUTOPROMPT IN WORD CODE#

Hi, this is an interesting idea! No guarantees, but this definitely could work, although you will probably get better prompts with multiple query (not sure this is the right word) images. I think the best way to use AutoPrompt for your application would be to copy the relevant lines of code to the open_clip training script.

#AUTOPROMPT IN WORD UPDATE#

There are a couple of different datasets for fact retrieval and relation extraction, so here are brief overviews of each:

- Fact retrieval
  - `original`: We used the T-REx subset provided by LAMA as our test set and gathered more facts from the original T-REx dataset that we partitioned into train and dev sets.
  - `original_rob`: We filtered facts in `original` so that each object is a single token for both BERT and RoBERTa.
  - `trex`: We split the extra T-REx data collected (for train/val sets of `original`) into train, dev, test sets.
- Relation extraction
  - Trimmed the `original` dataset to compensate for both the RE baseline and RoBERTa. We also excluded relations `P527` and `P1376` because the RE baseline doesn't consider them.

The datasets for sentiment analysis, NLI, fact retrieval, and relation extraction are available to download here.

The first time a user drags and drops a document into a document library that has a required metadata column, even if the column has a default value, the document will land as checked out until…
